AI × Optimization in Power Systems, Part 1: OPF

How differentiable optimization enables AI to learn feasible multi-period dispatch for power system operations.

A simple guide to how AI learns feasible multi-period dispatch decisions.

This is the first post in our two-part series on how AI and optimization come together to solve challenging power system problems.

- Part 1 (this post): AI for OPF using differentiable optimization

- Part 2: AI for OTS (optimal transmission switching) using differentiable optimization

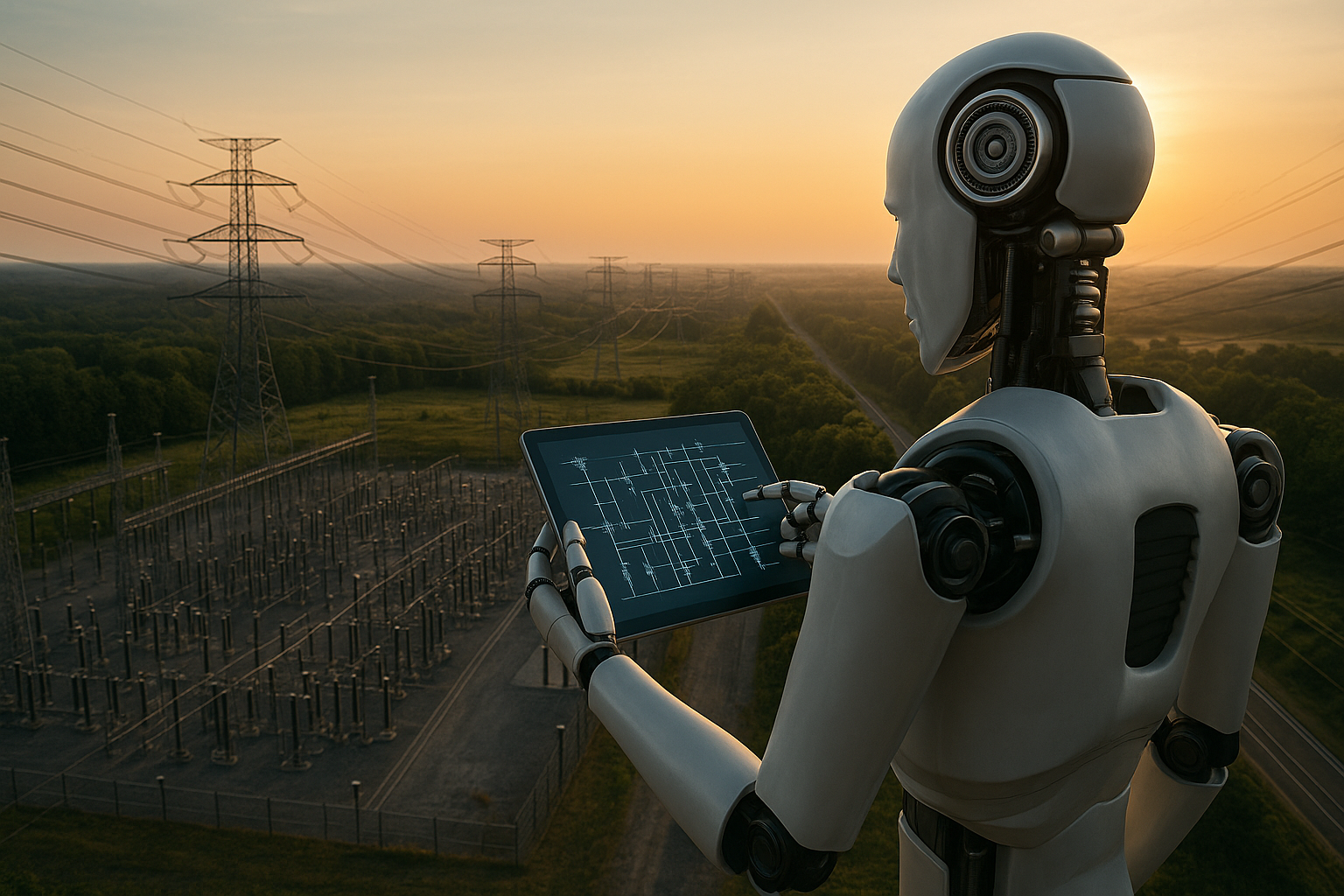

🔍 Why OPF is Hard — Especially Over Time

Optimal Power Flow (OPF) decides how generators should produce electricity while respecting:

- power balance

- generator limits

- line limits

- economic costs

Traditional OPF solvers work well for a single snapshot in time, but power systems operate across many hours, and a decision made in one hour constrains what is possible in the next. This introduces:

- generator ramping limits

- energy storage charging/discharging

- minimum/maximum state-of-charge

- inter-temporal dependencies

Suddenly, OPF becomes a multi-period problem—significantly harder and more time-consuming to solve.
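To make the time coupling concrete, here is a minimal sketch of a feasibility check for a multi-hour schedule. It is an illustration, not code from the MPA-DNN paper: the function name `check_schedule`, the argument layout, the lossless storage model, and the omission of line limits are all assumptions chosen to keep the example short.

```python
def check_schedule(gen, load, p_min, p_max, ramp, charge,
                   soc0, soc_min, soc_max, tol=1e-6):
    """Return a list of constraint violations (empty if the schedule is feasible).

    gen[t][g] : output of generator g in hour t (MW)
    load[t]   : system load in hour t (MW)
    charge[t] : storage charging power in hour t (MW, negative = discharging)
    Assumptions: no losses, no line limits, lossless storage, 1-hour steps.
    """
    problems = []
    soc = soc0
    for t, (row, demand) in enumerate(zip(gen, load)):
        # Power balance: generation must equal load plus storage charging.
        if abs(sum(row) - demand - charge[t]) > tol:
            problems.append(f"hour {t}: power balance")
        for g, p in enumerate(row):
            # Generator limits apply independently in every hour.
            if p < p_min[g] - tol or p > p_max[g] + tol:
                problems.append(f"hour {t}: generator {g} limit")
            # Ramping links this hour to the previous one.
            if t > 0 and abs(p - gen[t - 1][g]) > ramp[g] + tol:
                problems.append(f"hour {t}: generator {g} ramp")
        # State of charge accumulates over time (MW over 1 hour = MWh).
        soc += charge[t]
        if soc < soc_min - tol or soc > soc_max + tol:
            problems.append(f"hour {t}: state of charge")
    return problems


# Hypothetical two-generator, two-hour example.
issues = check_schedule(
    gen=[[50.0, 30.0], [80.0, 30.0]],
    load=[80.0, 110.0],
    p_min=[0.0, 0.0], p_max=[100.0, 50.0],
    ramp=[20.0, 20.0],
    charge=[0.0, 0.0], soc0=5.0, soc_min=0.0, soc_max=10.0,
)
print(issues)  # ['hour 1: generator 0 ramp']
```

Each hour is fine on its own here. The schedule fails only because generator 0 jumps by 30 MW between hours while its ramp limit is 20 MW, which is exactly the kind of violation a single-period solver cannot see.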

AI seems promising for speeding this up…

but there is a problem.

❗ Why Ordinary AI Fails at OPF

Figure 1. Failure of AI models in solving optimization problems.

A typical neural network:

- may produce dispatch values that violate constraints

- does not understand ramping or power balance

- cannot guarantee feasibility

- requires thousands of labeled OPF solutions

Those labels are expensive, because each one requires solving a full optimization problem.

We need AI that:

✔ obeys physics

✔ respects constraints

✔ works without labels

✔ generalizes across time

This leads us to the key idea of this series.

🧠 Differentiable Optimization — The Bridge Between AI and Power System Physics

Why differentiable optimization matters

Traditional neural networks learn by comparing predictions to labels.

But in power systems, feasibility is non-negotiable:

- You cannot violate ramp rates.

- You cannot violate generator limits.

- You cannot violate power balance.

A model that ignores these rules is unusable in real operations.

Differentiable optimization solves this.

It allows an optimization problem—like OPF—to become part of the neural network itself.

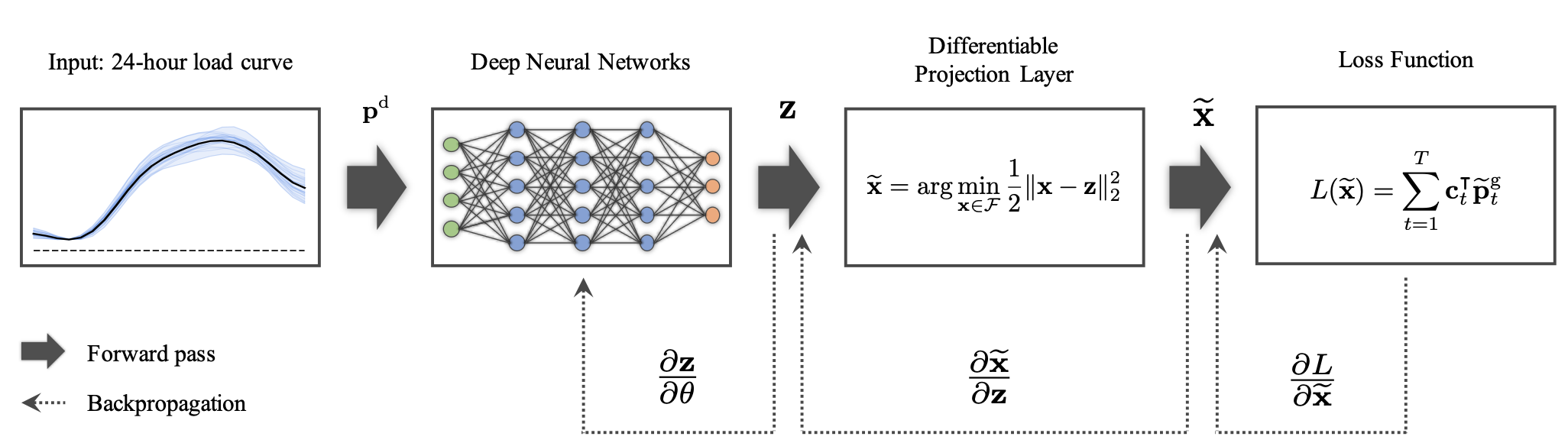

How it works (simple explanation)

Think of it like this:

The neural network makes a guess.

The optimization layer fixes the guess.

The neural network learns from the fix.

This means the model learns directly from physics and constraints, not from labels.

During training:

- The neural network outputs a raw dispatch.

- A projection/OPF layer adjusts it to the nearest feasible dispatch.

- The loss (cost) is computed using this feasible result.

- Gradients flow backward through the optimization layer.

Result:

✔ Every dispatch evaluated during training is feasible

✔ No labeled data required

✔ The network learns how to satisfy constraints on its own
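The guess, fix, learn loop can be sketched in a few lines. The example below is a deliberately reduced illustration rather than the MPA-DNN layer: it keeps only the power balance constraint, for which the projection and its backward pass have closed forms. The names `project_balance` and `project_balance_backward`, the quadratic cost coefficients `a`, and all numbers are assumptions made for the example.

```python
def project_balance(raw, demand):
    """Forward pass: nearest point to `raw` on the plane sum(p) == demand."""
    shift = (sum(raw) - demand) / len(raw)
    return [x - shift for x in raw]


def project_balance_backward(grad_out):
    """Backward pass: the projection's Jacobian is I - (1/n) * ones * ones^T,
    so pushing a gradient through it subtracts the gradient's mean."""
    mean = sum(grad_out) / len(grad_out)
    return [g - mean for g in grad_out]


def cost(p, a):
    """Quadratic generation cost: sum of 0.5 * a_i * p_i^2."""
    return sum(0.5 * ai * pi ** 2 for ai, pi in zip(a, p))


a = [1.0, 2.0, 4.0]          # hypothetical cost coefficients
raw = [30.0, 50.0, 40.0]     # the network's raw guess (sums to 120)
demand = 100.0

feasible = project_balance(raw, demand)                 # the fix
grad_feasible = [ai * pi for ai, pi in zip(a, feasible)]  # d cost / d feasible
grad_raw = project_balance_backward(grad_feasible)      # what the network learns from

# Sanity check of the first gradient entry with a finite difference.
eps = 1e-6
bumped = list(raw)
bumped[0] += eps
fd = (cost(project_balance(bumped, demand), a) - cost(feasible, a)) / eps
```

The analytic `grad_raw[0]` and the finite-difference estimate `fd` agree closely, which confirms that the gradient of the cost really does pass through the projection. With inequality constraints such as generator limits or ramping, the projection no longer has this simple form and is usually handled by a differentiable solver instead.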

This is the foundation of MPA-DNN, the focus of this post.

🤖 Introducing MPA-DNN

A differentiable-optimization-based AI model for multi-period OPF

MPA-DNN (Multi-Period Projection-Aware Deep Neural Network) is built entirely around this idea.

Figure 2. Conceptual overview of MPA-DNN.

Here’s what it does:

1️⃣ Learns from load profiles — not labels

The model takes a 24-hour load curve as input and predicts raw generator schedules.

No OPF solutions are needed for training.

2️⃣ Uses a differentiable projection layer to enforce feasibility

The projection layer:

- enforces power balance

- enforces generator limits

- enforces ramping

- enforces storage state-of-charge (SoC) limits

It “projects” the neural network’s raw schedule onto the feasible set of multi-period OPF.

This guarantees that every dispatch is feasible through the entire day.
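As an illustration of what "projecting onto the feasible set" means, the sketch below computes the exact Euclidean projection for a single hour with two of the constraints listed above: power balance and generator limits. This is a simplification and not the layer used in the paper. Ramping and SoC limits couple the hours together, which turns the projection into a quadratic program over the whole day. The function name `project_dispatch` and the numbers are hypothetical.

```python
def project_dispatch(raw, demand, p_min, p_max, iters=100):
    """Nearest dispatch to `raw` with p_min <= p <= p_max and sum(p) == demand.

    The optimality conditions give p_i = clip(raw_i - tau, p_min_i, p_max_i)
    for a single scalar tau, which is found here by bisection.
    """
    if not sum(p_min) <= demand <= sum(p_max):
        raise ValueError("demand cannot be met within the generator limits")

    def clipped(tau):
        return [min(max(x - tau, lo), hi)
                for x, lo, hi in zip(raw, p_min, p_max)]

    # At lo_tau every unit sits at its maximum, at hi_tau at its minimum.
    lo_tau = min(x - hi for x, hi in zip(raw, p_max))
    hi_tau = max(x - lo for x, lo in zip(raw, p_min))
    for _ in range(iters):
        tau = 0.5 * (lo_tau + hi_tau)
        if sum(clipped(tau)) > demand:
            lo_tau = tau   # too much generation: shift everything down further
        else:
            hi_tau = tau   # too little generation: shift down less
    return clipped(0.5 * (lo_tau + hi_tau))


# Hypothetical raw network output that violates both limits and balance.
dispatch = project_dispatch(
    raw=[120.0, 10.0, -5.0], demand=100.0,
    p_min=[0.0, 0.0, 0.0], p_max=[80.0, 50.0, 50.0],
)
print([round(p, 3) for p in dispatch])  # [80.0, 17.5, 2.5]
```

The first unit is pushed down to its 80 MW limit, and the remaining 20 MW is spread over the other two units in the way that moves the raw guess the least.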

3️⃣ Trains using only generation cost

Since feasibility is handled by the projection layer, training reduces to:

Make the feasible dispatch cheaper.

This allows fully unsupervised training.
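Here is a toy version of cost-only training, under strong simplifying assumptions. A trainable vector `raw` stands in for the neural network output, the projection keeps only power balance, and the cost is quadratic with hypothetical coefficients `a`. None of these choices come from the paper. The point is only to show that minimizing the cost of the projected dispatch, with no labels, can recover the optimal dispatch.

```python
def project_balance(raw, demand):
    """Nearest point to `raw` on the plane sum(p) == demand."""
    shift = (sum(raw) - demand) / len(raw)
    return [x - shift for x in raw]


def train(a, demand, steps=200, lr=0.2):
    """Gradient descent on the cost of the projected dispatch. No labels used."""
    raw = [0.0] * len(a)                      # stand-in for the network output
    for _ in range(steps):
        p = project_balance(raw, demand)      # feasible dispatch
        grad_p = [ai * pi for ai, pi in zip(a, p)]  # gradient of 0.5 * a_i * p_i^2
        mean = sum(grad_p) / len(grad_p)
        grad_raw = [g - mean for g in grad_p]  # backprop through the projection
        raw = [x - lr * g for x, g in zip(raw, grad_raw)]
    return project_balance(raw, demand)


learned = train(a=[1.0, 2.0, 4.0], demand=70.0)
print([round(p, 3) for p in learned])  # [40.0, 20.0, 10.0]
```

For this cost, the optimum equalizes marginal costs `a_i * p_i` across units, which gives 40, 20, and 10 MW for a 70 MW load. The training loop reaches the same answer without ever seeing it.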

📈 What MPA-DNN Achieves

Tested on the IEEE 39-bus system with energy storage, MPA-DNN shows:

✔ Feasible dispatch — always

Unlike standard neural networks, MPA-DNN never violates:

- ramping

- generator limits

- SoC constraints

This works because feasibility is built into the model through the projection layer, rather than left for the network to approximate.

✔ Near-optimal operational cost

Across load variations, MPA-DNN achieves:

- very low mean absolute error (MAE)

- <0.03% optimality gap

- strong performance even under distribution shift
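For readers unfamiliar with these metrics, the sketch below shows how they are commonly computed. The post does not spell out the exact definitions used in the paper, so treat these as the usual conventions: the gap compares the model's cost with a solver's optimal cost, and MAE compares two dispatch vectors. All numbers are made up for illustration and are not results from the paper.

```python
def optimality_gap_percent(model_cost, optimal_cost):
    """Relative extra cost of the model's dispatch versus the optimum, in percent."""
    return 100.0 * (model_cost - optimal_cost) / optimal_cost


def mean_absolute_error(predicted, reference):
    """Average absolute difference between two dispatch vectors (MW)."""
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


# Hypothetical values, for illustration only.
gap = optimality_gap_percent(model_cost=100_020.0, optimal_cost=100_000.0)
mae = mean_absolute_error([40.1, 19.9, 10.0], [40.0, 20.0, 10.0])
```

In this made-up case the gap is about 0.02%, meaning the model's schedule costs 20 more than the optimum on a base of 100,000.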

💡 Why This Matters

MPA-DNN demonstrates a new way to use AI in power systems:

- AI that respects physics

- AI that understands constraints

- AI that works without expensive labels

- AI that produces reliable dispatch schedules in real time

This is how AI and optimization come together to solve real power system problems.

🔗 Coming Next: Part 2 — OTS

In Part 2, we move from multi-period OPF to a much harder problem:

Optimal Transmission Switching (OTS) —

choosing the best network topology in real time.

OTS is NP-hard, slow to solve, and crucial for congestion relief.

We will show how Dispatch-Aware DNN (DA-DNN) uses differentiable OPF to learn feasible switching actions — again without labels.

Stay tuned.

📘 Reference

Kim, Yeomoon, Minsoo Kim, and Jip Kim. “MPA-DNN: Projection-Aware Unsupervised Learning for Multi-period DC-OPF.” arXiv preprint arXiv:2510.09349 (2025).