AI × Opt. in Power Systems — Part 2: OTS

How AI and differentiable optimization enable real-time, feasible optimal transmission switching.

A simple guide to how AI learns feasible switching decisions for real-time power system operation.

This post continues our series on AI × Optimization by moving from multi-period OPF to a much harder problem:

Optimal Transmission Switching (OTS) — choosing the best network topology.

🧭 What Is OTS, and Why Does It Matter?

Normally, power systems operate with a fixed network topology.

But some transmission lines can be switched (opened or closed) to help:

- relieve congestion

- redirect flows

- reduce operating cost

- improve reliability

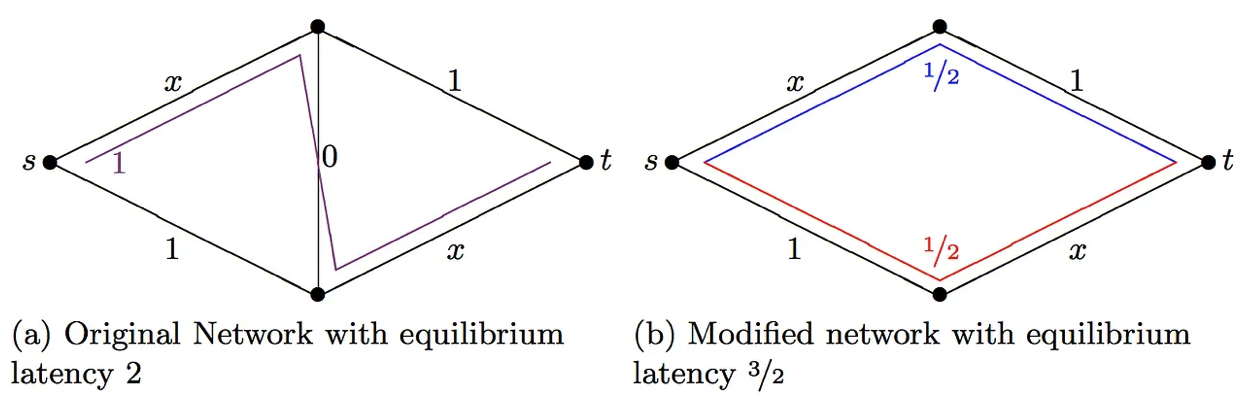

In many cases, opening a line can even reduce total cost — a counterintuitive effect known as Braess’s paradox.

Figure 1. Switching certain lines can reduce congestion and redirect flows more efficiently.(Image source: Medium)
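Here is a minimal sketch of the Braess-like effect on a hypothetical 3-bus triangle. All data are made up for illustration, and every line has the same reactance. Bus 1 has a cheap generator, bus 2 has an expensive generator that must produce at least 0.5 p.u., and bus 3 carries the load. Line 1-2 has a tight 0.2 p.u. limit. The toy has only one free dispatch variable, so DC-OPF is solved exactly by intersecting the line-limit intervals instead of calling an LP solver. The script then brute-forces all 2^3 topologies.

```python
import itertools

# Hypothetical 3-bus triangle (illustrative numbers, not from the DA-DNN paper).
# Bus 1: cheap generator, bus 2: expensive generator, bus 3: load and slack bus.
LINES = {"1-2": (0, 1), "1-3": (0, 2), "2-3": (1, 2)}  # line -> (from bus, to bus)
LIMIT = {"1-2": 0.2, "1-3": 2.0, "2-3": 2.0}            # thermal limits (p.u.)
DEMAND = 2.0                                           # load at bus 3 (p.u.)
COST = (10.0, 50.0)                                    # $/p.u. for gen 1 and gen 2
G2_MIN = 0.5                                           # gen 2 must produce at least this


def line_flows(b, p1, p2):
    """DC power flow with bus 3 as slack; b maps line -> susceptance.
    Returns None when buses 1-2 are cut off from bus 3 (singular matrix)."""
    b11 = b["1-2"] + b["1-3"]
    b22 = b["1-2"] + b["2-3"]
    b12 = -b["1-2"]
    det = b11 * b22 - b12 * b12
    if abs(det) < 1e-12:
        return None
    theta = [(b22 * p1 - b12 * p2) / det, (b11 * p2 - b12 * p1) / det, 0.0]
    return {name: b[name] * (theta[i] - theta[j]) for name, (i, j) in LINES.items()}


def dc_opf(b):
    """Exact DC-OPF for this toy: one free variable p1 (p2 = DEMAND - p1).
    Returns (cost, p1), or None if no dispatch satisfies all limits."""
    f0 = line_flows(b, 0.0, DEMAND)
    f1 = line_flows(b, 1.0, DEMAND - 1.0)
    if f0 is None or f1 is None:
        return None
    lo, hi = 0.0, DEMAND - G2_MIN                 # generator limits on p1
    for name in LINES:
        a, s = f0[name], f1[name] - f0[name]      # flow is linear in p1: a + s * p1
        cap = LIMIT[name]
        if abs(s) < 1e-12:
            if abs(a) > cap + 1e-9:
                return None
        else:
            r1, r2 = (-cap - a) / s, (cap - a) / s
            lo, hi = max(lo, min(r1, r2)), min(hi, max(r1, r2))
    if lo > hi + 1e-9:
        return None
    p1 = hi                                       # gen 1 is cheaper: use as much as allowed
    return COST[0] * p1 + COST[1] * (DEMAND - p1), p1


# Brute-force OTS: try every on/off combination of the three lines.
results = {}
for status in itertools.product((1, 0), repeat=len(LINES)):
    b = {name: float(s) for name, s in zip(LINES, status)}
    opened = tuple(name for name, s in zip(LINES, status) if s == 0)
    results[opened] = dc_opf(b)
    print("open:", opened or "none", "->", results[opened])
```

In this toy, keeping every line closed costs about 48. Opening line 1-2 lets the cheap generator serve more of the load and cuts the cost to 40. Opening line 2-3 instead is infeasible, and so is opening any two lines, because that isolates a bus.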

Sounds useful, right?

There’s a problem.

❗ Why OTS Is Hard

OTS is computationally hard (NP-hard in general) because:

- every switchable line adds a binary on/off decision

- with L switchable lines there are 2^L possible topologies, a combinatorial explosion

- traditional MILP (mixed-integer linear programming) solvers cannot solve large systems quickly

- some grid cases take hours, or cannot be solved at all within practical time limits
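A quick back-of-the-envelope calculation shows how fast exhaustive search breaks down. The 1 ms per DC-OPF solve is an illustrative assumption, not a measured figure.

```python
# Back-of-the-envelope cost of exhaustive OTS search.
# Assumption (illustrative): one DC-OPF solve takes 1 ms.
SECONDS_PER_OPF = 1e-3
SECONDS_PER_DAY = 86_400


def num_topologies(num_switchable_lines: int) -> int:
    """Each switchable line is on or off, so the count doubles per line."""
    return 2 ** num_switchable_lines


def brute_force_days(num_switchable_lines: int) -> float:
    """Days needed to solve one DC-OPF per candidate topology."""
    return num_topologies(num_switchable_lines) * SECONDS_PER_OPF / SECONDS_PER_DAY


for n in (10, 20, 30, 40):
    print(f"{n} lines: {num_topologies(n):,} topologies, about {brute_force_days(n):.3g} days")
```

With just 30 switchable lines, this is over a billion OPF solves, or roughly 12 days at 1 ms each. Real systems have hundreds of lines. MILP solvers use branch-and-bound to avoid full enumeration, but their worst-case effort still grows exponentially.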

For real-time operations, we need switching decisions in milliseconds, not hours.

AI seems like a great solution…

but ordinary AI struggles here too.

⚠️ Why Ordinary AI Fails at OTS

A neural network trained to predict line switching:

- often outputs infeasible topologies

- violates power flow constraints

- can disconnect parts of the network (islanding)

- may overload lines

- requires thousands of labeled OTS solutions, each of which needs an expensive MILP solve
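Even the simplest check, whether the rounded topology still connects every bus, is easy to fail. The sketch below rounds made-up network outputs for a hypothetical 4-bus network to 0/1, then tests connectivity with a breadth-first search. Connectivity is necessary but not sufficient: a connected topology can still overload lines, and only an OPF reveals that.

```python
from collections import deque


def is_connected(num_buses, lines, status):
    """True if the lines with status 1 connect every bus (breadth-first search from bus 0)."""
    neighbors = {bus: [] for bus in range(num_buses)}
    for (i, j), s in zip(lines, status):
        if s == 1:
            neighbors[i].append(j)
            neighbors[j].append(i)
    seen, queue = {0}, deque([0])
    while queue:
        bus = queue.popleft()
        for nxt in neighbors[bus]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) == num_buses


# Hypothetical 4-bus ring with one chord, and made-up network outputs.
lines = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
predicted = [0.9, 0.4, 0.3, 0.2, 0.8]
status = [1 if p >= 0.5 else 0 for p in predicted]   # naive rounding to 0/1

print(status, "connected:", is_connected(4, lines, status))
```

Here rounding keeps only lines 0-1 and 0-2, so bus 3 ends up isolated.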

And without feasibility, such a model is not deployable.

We need AI that:

✔ handles binary decisions

✔ respects OPF constraints

✔ works without labels

✔ runs in real time

This brings us to our key tool — again — differentiable optimization.

🧠 Differentiable Optimization for Discrete Decisions

In Part 1, differentiable optimization helped AI learn continuous dispatch that respects physics.

In Part 2, we use it to help AI learn discrete topology decisions.

Here’s the idea:

- The neural network proposes line statuses (values between 0 and 1).

- An embedded DC-OPF layer computes the least-cost dispatch for this topology while enforcing power flow and line limits.

- The generation cost becomes the training signal.

- The neural network learns to propose topologies that lead to lower OPF cost.

- After training, we binarize (0/1) the line statuses for real operation.

Differentiable optimization ensures that:

- every training step is feasible

- the model learns from physics, not labels

- solutions remain safe when deployed

This is the core of DA-DNN.
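The whole loop can be sketched on a deliberately tiny, hypothetical example. The network is a 3-bus triangle: a cheap generator at bus 1, an expensive must-run generator (at least 0.5 p.u.) at bus 2, and a 2 p.u. load at bus 3. Line 1-2 has a tight 0.2 p.u. limit. Several simplifications are assumptions of this sketch, not DA-DNN's design:

- the "neural network" is a single trainable logit for line 1-2, with no input features
- the relaxed status multiplies the line's susceptance
- the OPF is solved exactly only because the toy has one free dispatch variable
- the gradient comes from finite differences, whereas a real differentiable optimization layer typically differentiates through the OPF's optimality conditions

```python
import math

# Hypothetical toy, not the paper's setup: 3-bus triangle, cheap gen at bus 1,
# expensive gen at bus 2 (must produce >= 0.5 p.u.), 2 p.u. load at slack bus 3.
LINES = {"1-2": (0, 1), "1-3": (0, 2), "2-3": (1, 2)}
LIMIT = {"1-2": 0.2, "1-3": 2.0, "2-3": 2.0}
DEMAND, G2_MIN, COST = 2.0, 0.5, (10.0, 50.0)


def opf_cost(z12):
    """Exact DC-OPF cost when line 1-2 has relaxed status z12 in [0, 1].
    Assumption: the status scales the line's susceptance; lines 1-3 and 2-3 stay closed.
    Every z12 in [0, 1] is feasible in this toy, so infeasibility checks are omitted."""
    b = {"1-2": z12, "1-3": 1.0, "2-3": 1.0}
    b11, b22, b12 = b["1-2"] + b["1-3"], b["1-2"] + b["2-3"], -b["1-2"]
    det = b11 * b22 - b12 * b12

    def flows(p1):
        p2 = DEMAND - p1
        theta = [(b22 * p1 - b12 * p2) / det, (b11 * p2 - b12 * p1) / det, 0.0]
        return {n: b[n] * (theta[i] - theta[j]) for n, (i, j) in LINES.items()}

    f0, f1 = flows(0.0), flows(1.0)
    lo, hi = 0.0, DEMAND - G2_MIN
    for n in LINES:
        a, s = f0[n], f1[n] - f0[n]               # flow = a + s * p1
        if abs(s) > 1e-12:
            r1, r2 = (-LIMIT[n] - a) / s, (LIMIT[n] - a) / s
            lo, hi = max(lo, min(r1, r2)), min(hi, max(r1, r2))
    p1 = hi                                       # cheap generator as high as the limits allow
    return COST[0] * p1 + COST[1] * (DEMAND - p1)


def sigmoid(w):
    return 1.0 / (1.0 + math.exp(-w))


w = 3.0                     # "network" = one trainable logit; sigmoid(3) ~ 0.95, line starts closed
lr, h = 0.5, 1e-5
initial_cost = opf_cost(sigmoid(w))
for step in range(50):
    # Finite-difference stand-in for backpropagating through a differentiable OPF layer
    grad = (opf_cost(sigmoid(w + h)) - opf_cost(sigmoid(w - h))) / (2 * h)
    w -= lr * grad          # gradient descent on the generation cost alone (no labels)

z12 = sigmoid(w)
status = 1 if z12 >= 0.5 else 0            # binarize for deployment
final_cost = opf_cost(float(status))       # one last OPF on the 0/1 topology
print(f"cost {initial_cost:.2f} -> relaxed z12 = {z12:.3f} -> status {status}, cost {final_cost:.2f}")
```

The line starts almost closed (status about 0.95, cost about 47.8). Gradient descent on the cost alone drives the status below 0.5. After binarization the line is opened, and the final OPF cost is 40.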

🤖 Introducing DA-DNN

A dispatch-aware neural network for real-time OTS

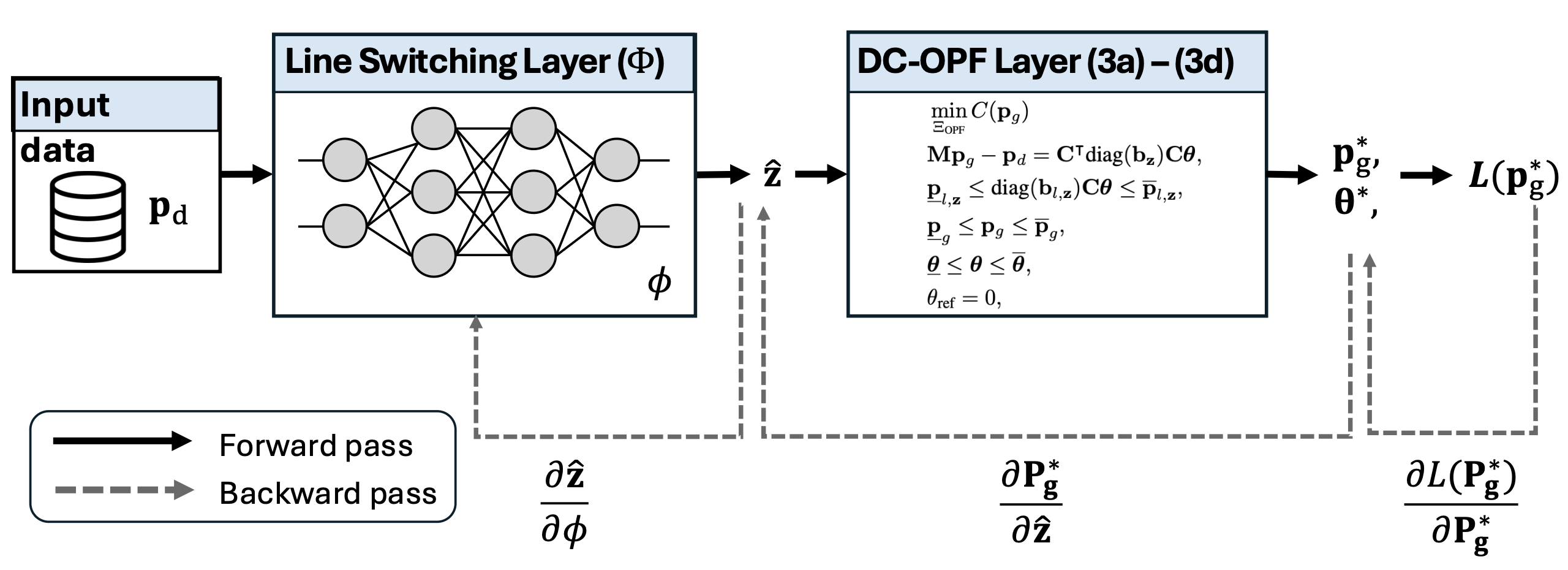

DA-DNN (Dispatch-Aware Deep Neural Network) embeds a differentiable DC-OPF solver inside the model.

Figure 2. DA-DNN: neural network predicts line statuses → OPF enforces constraints → cost drives learning.

It works in three steps:

1️⃣ The network predicts relaxed line statuses

Instead of hard 0/1 decisions, the model predicts values in [0, 1]:

- values near 1 → keep the line

- values near 0 → open the line

This continuous relaxation allows gradients to flow.
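Why does the relaxation matter? A hard 0/1 threshold is flat almost everywhere, so gradient-based training gets no signal from it. The sketch below compares finite-difference derivatives of a hard threshold and a sigmoid. The sigmoid output layer is an assumption for illustration (a common way to squash outputs into [0, 1]), not a detail given in this post.

```python
import math


def hard_status(logit):
    """0/1 decision: piecewise constant, so its derivative is zero almost everywhere."""
    return 1.0 if logit >= 0.0 else 0.0


def relaxed_status(logit):
    """Sigmoid relaxation: a smooth value in (0, 1) with a nonzero derivative everywhere."""
    return 1.0 / (1.0 + math.exp(-logit))


def numeric_grad(f, x, h=1e-6):
    """Central finite-difference derivative of f at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for logit in (-2.0, 0.5, 3.0):
    print(f"logit {logit:+.1f}: hard grad = {numeric_grad(hard_status, logit):.3f}, "
          f"sigmoid grad = {numeric_grad(relaxed_status, logit):.3f}")
```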

2️⃣ The embedded OPF layer checks feasibility

Given the predicted topology, DC-OPF computes:

- generator dispatch

- voltage angles

- line flows

If the predicted topology would overload lines, the OPF re-dispatches generators to stay within limits, usually at a higher cost. That higher cost is what teaches the network to avoid such topologies.
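For reference, here is one standard way to write the DC-OPF such a layer could solve for predicted statuses $\hat z_l \in [0,1]$. Letting each status scale the susceptance $b_l$ of line $l=(i,j)$ is an assumption made for illustration; the paper may couple the statuses to the OPF differently. The symbols are:

- $p_g$: output of generator $g$, with cost $c_g$ and limits $\underline{P}_g, \overline{P}_g$
- $d_i$: load at bus $i$
- $\theta_i$: voltage angle at bus $i$
- $f_l$: flow on line $l$, with limit $\overline{F}_l$
- $\mathcal{G}_i$: the generators at bus $i$
- $\delta^{+}(i)$, $\delta^{-}(i)$: the lines leaving and entering bus $i$

$$
\begin{aligned}
\min_{p,\,\theta,\,f}\quad & \sum_{g} c_g\, p_g \\
\text{s.t.}\quad & \sum_{g \in \mathcal{G}_i} p_g - d_i = \sum_{l \in \delta^{+}(i)} f_l - \sum_{l \in \delta^{-}(i)} f_l && \forall i \\
& f_l = \hat z_l\, b_l\, (\theta_i - \theta_j) && \forall l = (i, j) \\
& -\overline{F}_l \le f_l \le \overline{F}_l && \forall l \\
& \underline{P}_g \le p_g \le \overline{P}_g && \forall g \\
& \theta_{\mathrm{ref}} = 0
\end{aligned}
$$

With $\hat z_l = 1$ this is ordinary DC-OPF. As $\hat z_l \to 0$, the line carries less and less flow, which approximates opening it.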

3️⃣ Training uses only generation cost

No labels needed.

The model simply tries to make OPF cheaper:

Lower OPF cost → better switching decisions.
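In symbols, here is one generic way to write this label-free objective (our notation, not necessarily the paper's). A network with weights $\phi$ maps the operating condition $x_s$ of training sample $s$ (for example, the loads) to relaxed statuses $\hat z_\phi(x_s)$. The loss is the optimal DC-OPF cost $C^\star$ under those statuses:

$$
\min_{\phi}\; \frac{1}{N} \sum_{s=1}^{N} C^{\star}\big(\hat z_{\phi}(x_s);\, x_s\big),
\qquad
C^{\star}(\hat z;\, x) = \min_{p \,\in\, \mathcal{F}(\hat z;\, x)} \sum_{g} c_g\, p_g
$$

$$
\frac{\partial C^{\star}}{\partial \phi} = \sum_{l} \frac{\partial C^{\star}}{\partial \hat z_l}\,\frac{\partial \hat z_l}{\partial \phi}
$$

Here $\mathcal{F}(\hat z; x)$ is the set of dispatches that satisfy the DC-OPF constraints for statuses $\hat z$ and operating condition $x$. The first gradient factor is what the differentiable OPF layer supplies (typically by differentiating its optimality conditions). The second is ordinary backpropagation through the network.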

After training, line statuses are binarized and OPF is run one final time.

Total inference time = one DC-OPF solve (milliseconds).

📈 What DA-DNN Achieves

✔ Real-time feasibility

The embedded OPF layer ensures that, regardless of the predicted topology, the resulting dispatch is always feasible.

This is crucial for real-world operation.

✔ Lower cost than DC-OPF on a fixed topology

Even though it runs about as fast as a single DC-OPF solve, DA-DNN produces topologies that:

- reduce congestion

- unlock hidden flexibility

- lower total generation cost

✔ Scales to large networks

On the 300-bus system:

- DA-DNN found improved topologies in milliseconds

- Commercial OTS solvers failed to find any solution within 1 hour

This shows that DA-DNN stays real-time at realistic system sizes.

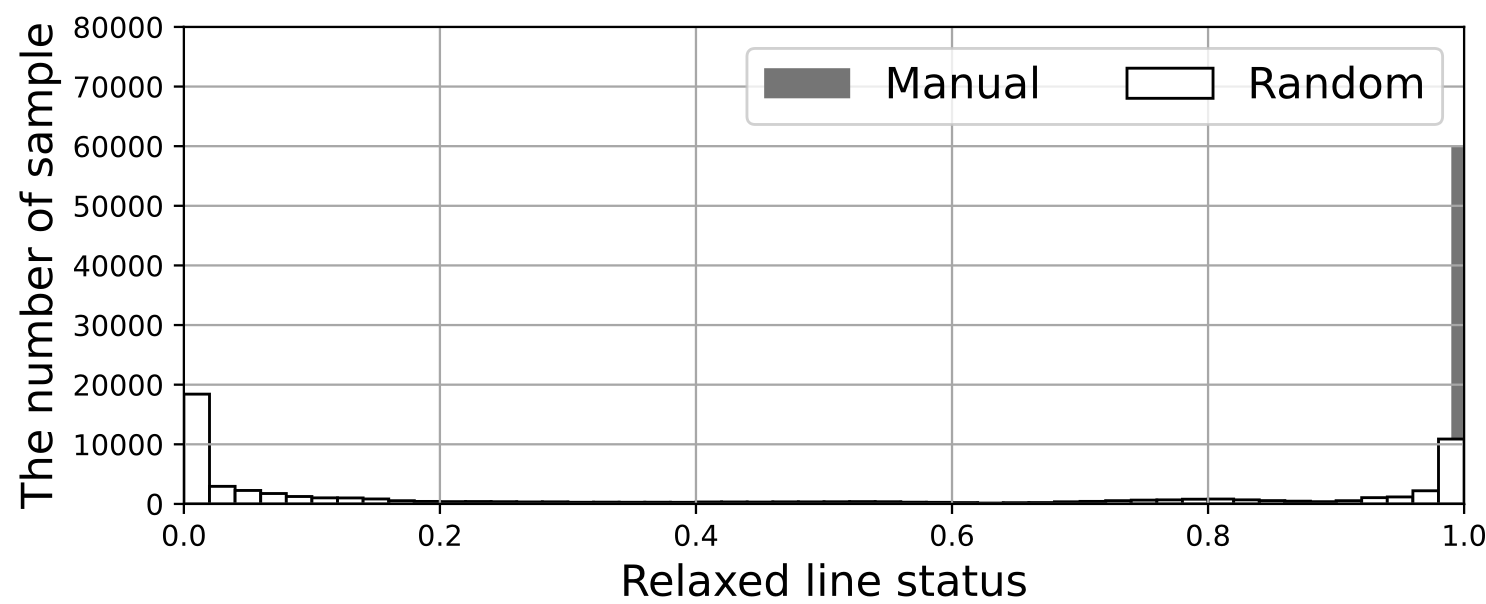

✔ Smooth training, thanks to smart initialization

DA-DNN uses a special initialization trick:

Figure 3. Histogram of the predicted relaxed line status values from untrained DA-DNN with different weight and bias initialization.

- start with all lines “closed”

- ensures OPF is feasible at the first iteration

- prevents training collapse

This allows stable learning even on large grids.
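To see why the bias matters, the sketch below initializes a hypothetical sigmoid output layer for 200 lines in two ways and inspects the untrained outputs, in the spirit of Figure 3. The layer sizes, the weight scales, and the bias value 3.0 are illustrative assumptions, not the paper's settings.

- With a zero bias and large weights, many statuses start near 0, so those lines are effectively opened from the very first iteration.
- With small weights and a positive bias, every status starts near sigmoid(3) ≈ 0.95, which is the fully closed network.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def untrained_statuses(n_lines, hidden, weight_std, bias, seed=0):
    """Relaxed statuses from a freshly initialized sigmoid output layer (hypothetical)."""
    rng = random.Random(seed)
    statuses = []
    for _ in range(n_lines):
        weights = [rng.gauss(0.0, weight_std) for _ in hidden]
        pre_activation = sum(w * h for w, h in zip(weights, hidden)) + bias
        statuses.append(sigmoid(pre_activation))
    return statuses


rng = random.Random(1)
hidden = [rng.uniform(0.0, 1.0) for _ in range(64)]   # stand-in for last hidden-layer activations

results = {}
for weight_std, bias in [(1.0, 0.0), (0.01, 3.0)]:
    z = untrained_statuses(200, hidden, weight_std, bias)
    results[(weight_std, bias)] = z
    share_closed = sum(v > 0.9 for v in z) / len(z)
    print(f"std={weight_std}, bias={bias}: min status {min(z):.3f}, share > 0.9: {share_closed:.2f}")
```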

💡 Why This Matters

DA-DNN represents a new class of optimization-aware AI models:

- AI whose dispatch satisfies the physical (DC power flow) constraints by construction

- AI that requires no labeled data

- AI that finds high-quality solutions to NP-hard problems in real time

- AI that can support operators during congestion, contingencies, and market operation

This is exactly the kind of AI needed for modern power systems: fast, feasible, and physics-aware.

🎉 End of Part 2 — What’s Next?

You now understand how differentiable optimization enables AI to:

- learn feasible multi-period dispatch (MPA-DNN)

- learn feasible switching decisions (DA-DNN)

Possible topics for Part 3 include:

- AI for Security-Constrained OPF

- AI for Unit Commitment

- AI for system stability and resilience

- Practical tutorial: building differentiable optimization layers

📘 Reference

Kim, Minsoo, and Jip Kim. “Dispatch-Aware Deep Neural Network for Optimal Transmission Switching: Toward Real-Time and Feasibility Guaranteed Operation.” arXiv preprint arXiv:2507.17194 (2025).